[Paper skim][NIPS21] Low-fidelity video encoder optimization for temporal action localization

Paper information

- Author: Xu, Mengmeng; Perez Rua, Juan Manuel; Zhu, Xiatian; Ghanem, Bernard; Martinez, Brais

- Year: 2021

- Journal: Advances in neural information processing systems

Personal summary

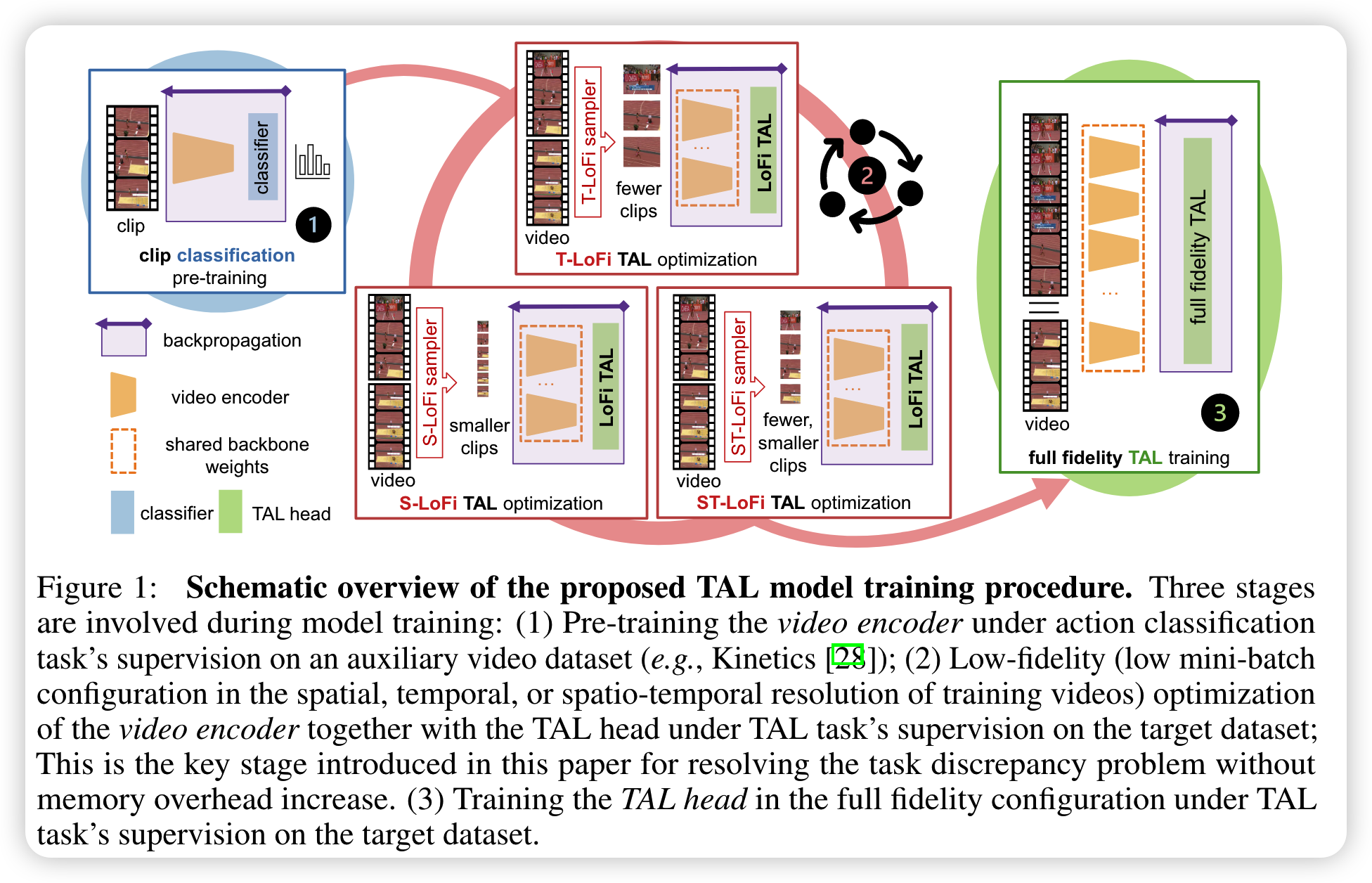

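The problem the authors pose is clear and their analysis is thorough. They observe that (fully supervised) TAL methods all suffer from a task discrepancy problem, so, following the practice in object detection, they explore end-to-end training for TAL. To cope with GPU memory limits, they borrow the idea of [57], "A multigrid method for efficiently training video models", and design a low-fidelity (LoFi) video encoder optimization method tailored to TAL.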

Notes

The paper gets straight to the point: an encoder trained on action classification (AC) and then used directly for TAL suffers from a task discrepancy. Quoting the paper: "first optimizing a video encoder on a large action classification dataset (i.e., source domain), followed by freezing the encoder and training a TAL head on the action localization dataset (i.e., target domain). This results in a task discrepancy problem for the video encoder – trained for action classification, but used for TAL. Intuitively, joint optimization with both the video encoder and TAL head is an obvious solution to this discrepancy. However, this is not operable for TAL subject to the GPU memory constraints, due to the prohibitive computational cost in processing long untrimmed videos."

Proposed solution: "we propose to reduce the mini-batch composition in terms of temporal, spatial or spatio-temporal resolution so that jointly optimizing the video encoder and TAL head becomes operable under the same memory conditions of a mid-range hardware budget. Crucially, this enables the gradients to flow backwards through the video encoder conditioned on a TAL supervision loss, favourably solving the task discrepancy problem and providing more effective feature representations."
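To make the memory argument concrete, here is a minimal sketch that counts input elements per video before and after reducing temporal and spatial resolution. The function names, the 3-channel RGB assumption, the reduction factors, and the example sizes are all hypothetical and not taken from the paper. Activation memory is only roughly proportional to this count, so treat the result as a back-of-the-envelope estimate.

```python
from typing import Tuple

# (frames, height, width); a hypothetical convention for this sketch.
Shape = Tuple[int, int, int]


def low_fidelity_shape(shape: Shape, temporal_factor: int = 1,
                       spatial_factor: int = 1) -> Shape:
    """Return the input shape after reducing temporal and/or spatial resolution."""
    if temporal_factor < 1 or spatial_factor < 1:
        raise ValueError("reduction factors must be >= 1")
    frames, height, width = shape
    # Integer division, keeping at least one frame and one pixel per side.
    return (
        max(1, frames // temporal_factor),
        max(1, height // spatial_factor),
        max(1, width // spatial_factor),
    )


def input_elements(shape: Shape, channels: int = 3) -> int:
    """Number of scalar values in one video input of the given shape."""
    frames, height, width = shape
    return channels * frames * height * width


# Illustrative numbers only (assumed, not from the paper).
full = (256, 224, 224)
lofi = low_fidelity_shape(full, temporal_factor=2, spatial_factor=2)
ratio = input_elements(full) / input_elements(lofi)
print(lofi, ratio)
```

Halving the frame count and both spatial sides shrinks the input by a factor of 2 × 2 × 2 = 8, which is the kind of saving that could make end-to-end training fit in the same memory budget.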

What the task discrepancy problem means: "Specifically, the video encoder is trained so that different short segments within an action sequence are mapped to similar outputs, thus encouraging insensitivity to the temporal boundaries of actions. This is not desirable for a TAL model. We identify this as a task discrepancy problem."

The authors carefully analyze the cause of the problem. An obvious way to resolve the task discrepancy problem is end-to-end training, as in object detection. However, the model's input is untrimmed video, which requires a huge amount of GPU memory. This is why two-stage optimization (optimize the encoder on the AC task, freeze it, then optimize the TAL head with the TAL loss) is the common and feasible choice. On the other hand, two-stage optimization as a form of transfer learning mainly addresses the data distribution shift problem, not the task shift problem.

"Indeed, jointly optimizing all components of a CNN architecture end-to-end with the target task’s supervision is a common practice, e.g., training models for object detection in static images [18, 46, 38]. Unfortunately, this turns out to be non-trivial for TAL. As mentioned above, model training is severely restricted by the large input size of untrimmed videos and subject to the memory constraint of GPUs. This is why the two-stage optimization pipeline as described above becomes the most common and feasible choice in practice for optimizing a TAL model. On the other hand, existing transfer learning methods mostly focus on tackling the data distribution shift problem across different datasets [70, 50], rather than the task shift problem we study here. Regardless, we believe that solving this limitation of the TAL training design bears a great potential for improving model performance."

How is it done? "It is designed to adapt the video encoder from action classification to TAL whilst subject to the same hardware budget. This is achieved by introducing a simple strategy characterized by a new intermediate training stage where both the video encoder and the TAL head are optimized end-to-end using a lower temporal and/or spatial resolution (i.e., low-fidelity) in the mini-batch construction" (p. 2)
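The training stages described above can be written down as a small schedule. This is only a sketch: the `Stage` dataclass, the stage names, and the module names `encoder` and `tal_head` are hypothetical, and the final head-only stage is my assumption, inferred from the word "intermediate" rather than stated in the quoted text.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    supervision: str            # which loss drives the parameter updates
    trainable: Tuple[str, ...]  # modules that receive gradients
    low_fidelity: bool          # whether inputs use reduced resolution


def build_schedule() -> List[Stage]:
    """Hypothetical schedule with the LoFi stage inserted in the middle."""
    return [
        # Encoder pre-trained on action classification (source domain).
        Stage("pretrain", "action classification", ("encoder",), False),
        # Intermediate stage: encoder and TAL head trained end-to-end
        # on low-fidelity inputs so that training fits in memory.
        Stage("lofi", "TAL", ("encoder", "tal_head"), True),
        # Assumed final stage: encoder frozen, TAL head trained as usual.
        Stage("head_only", "TAL", ("tal_head",), False),
    ]


def encoder_sees_tal_loss(schedule: List[Stage]) -> bool:
    """True if some stage updates the encoder under TAL supervision."""
    return any(
        s.supervision == "TAL" and "encoder" in s.trainable for s in schedule
    )


schedule = build_schedule()
two_stage_baseline = [schedule[0], schedule[2]]
print(encoder_sees_tal_loss(schedule))            # with the LoFi stage
print(encoder_sees_tal_loss(two_stage_baseline))  # conventional two-stage
```

Dropping the middle stage recovers the conventional two-stage pipeline, in which the encoder never receives gradients from the TAL loss. That is the task discrepancy the LoFi stage is meant to remove.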

Method framework

Selected experiments

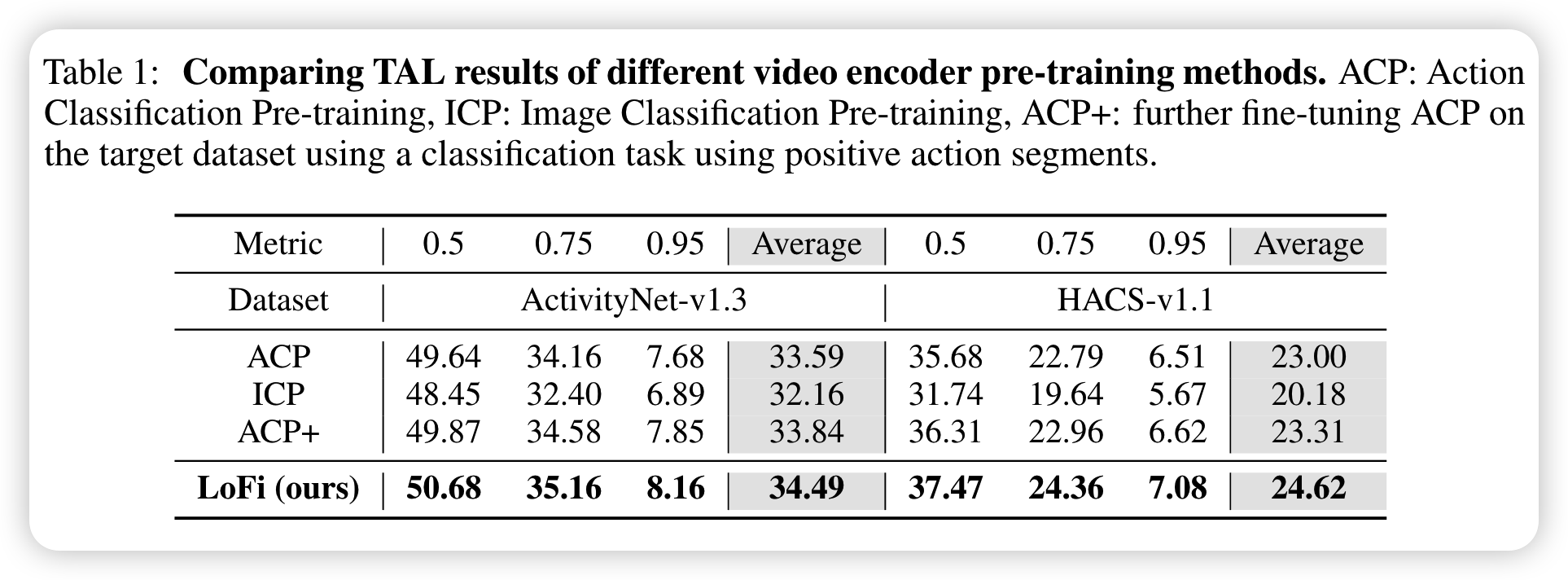

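Comparison of different pre-training settings

Conclusions:

- Rows 1-2 are pre-trained on unrelated data, while row 3 is trained on relevant data, which shows the importance of pre-training on relevant data.

- Row 2 is the result of image classification pre-training. Compared with the other results, it shows that handling the data distribution shift is still necessary.

- The last row demonstrates the effectiveness of the proposed method, and the comparison between the last two rows shows that handling the task-level difference helps improve model performance.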

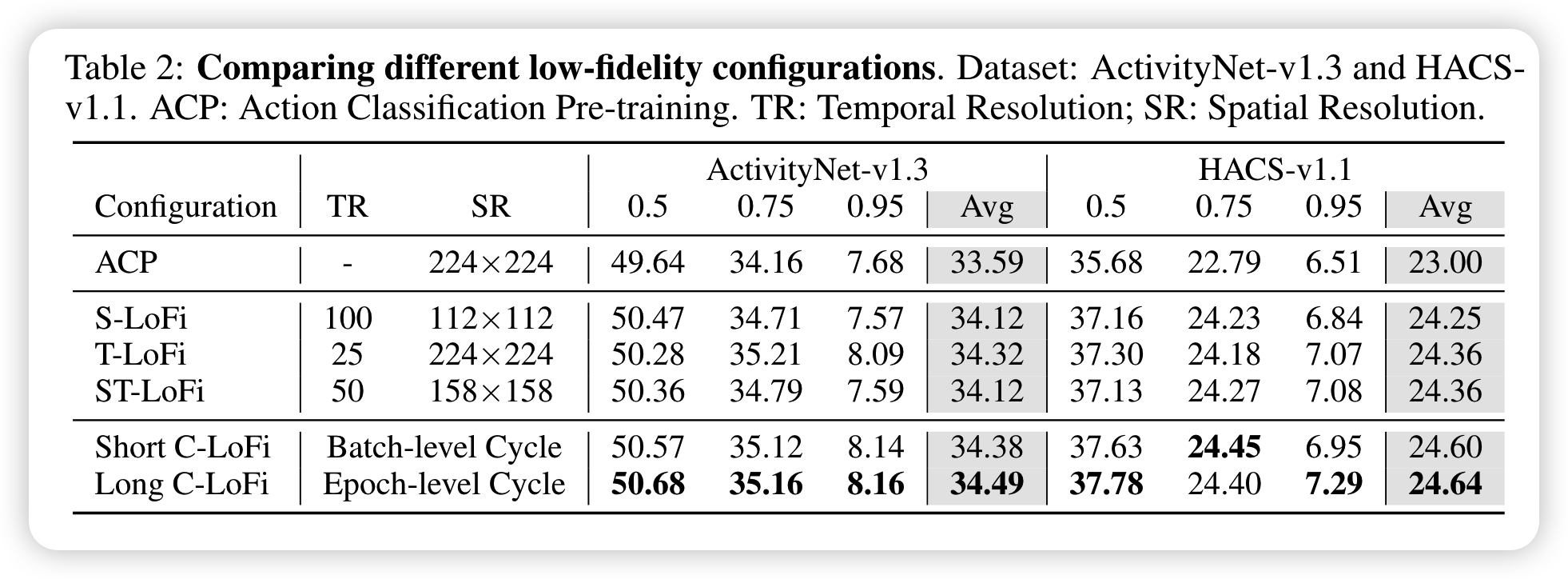

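Comparison of different method configurations

Conclusions:

- Every LoFi configuration brings some improvement, indicating that operating on either the spatial or the temporal dimension benefits the LoFi method.

- Combining them with the designed cyclic training strategy also improves performance.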

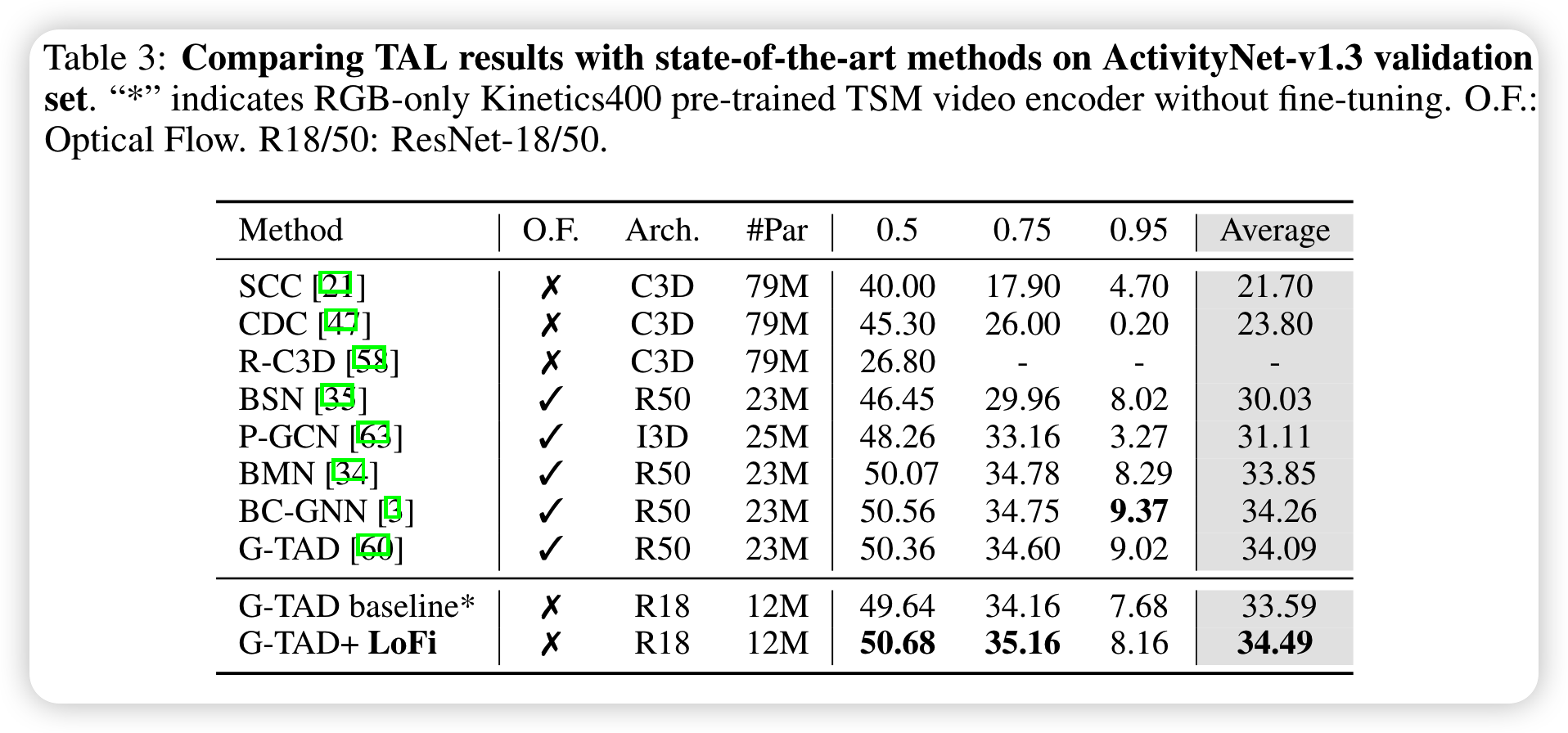

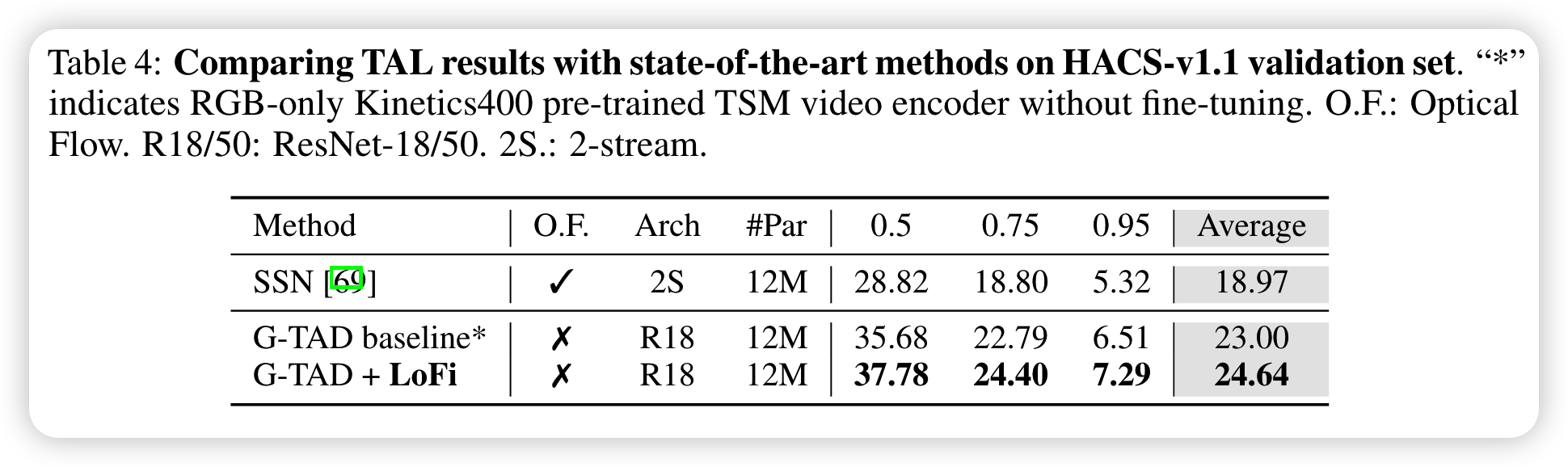

Comparison with SOTA

This shows that, using only the RGB stream, the authors' method outperforms many two-stream-based SOTA methods.

[57] Wu, Chao-Yuan, et al. "A multigrid method for efficiently training video models." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.
